About

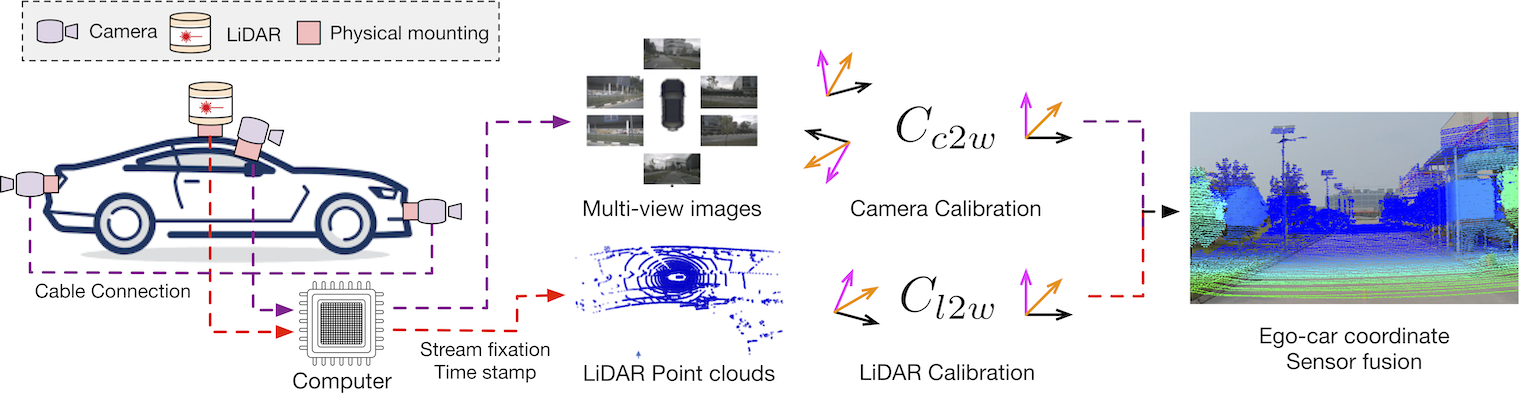

3D perception in autonomous driving relies on two critical sensors: the camera and the LiDAR. The camera provides rich semantic information such as color and texture, while the LiDAR captures the 3D shape and location of surrounding objects. Fusing the two modalities has been found to significantly boost the performance of 3D perception models, because each modality carries information that complements the other. However, we observe that current datasets are captured with expensive vehicles explicitly designed for data collection, and for various reasons they do not reflect the realistic data distribution.

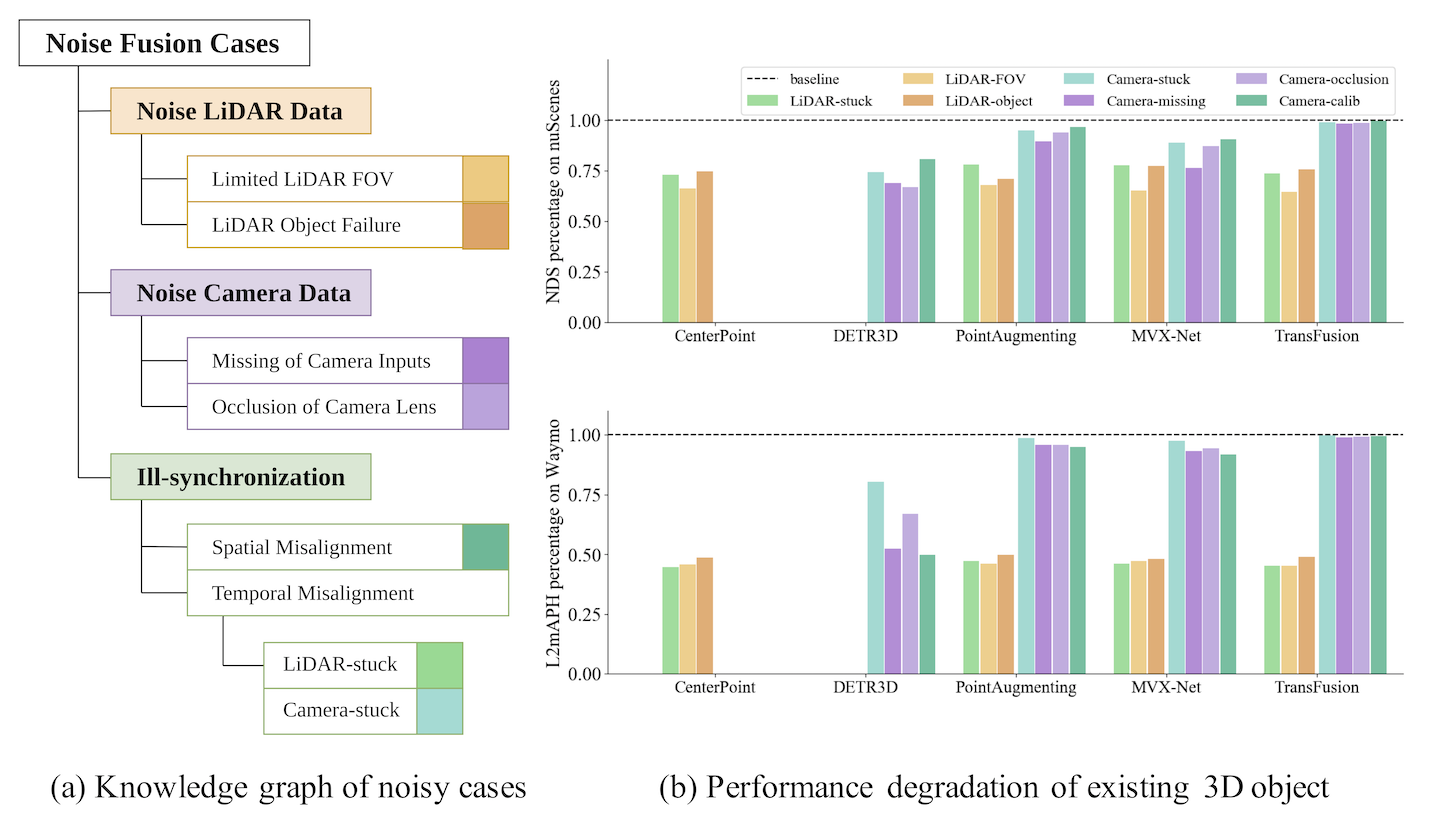

To this end, we collect a series of real-world cases with noisy data distributions (see Data Examples) and systematically formulate a robustness benchmark toolkit (see Data Toolkits) that simulates these cases on any clean autonomous driving dataset. We showcase the effectiveness of the toolkit by establishing a robustness benchmark on two widely adopted autonomous driving datasets, nuScenes and Waymo. Then, to the best of our knowledge for the first time, we holistically benchmark state-of-the-art fusion methods (see Benchmark). We observe that: i) most fusion methods, when developed solely on these data, tend to fail when the LiDAR input is disrupted; ii) the improvement contributed by the camera input is significantly smaller than that contributed by the LiDAR input.
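To make the idea of simulating noisy cases on a clean dataset concrete, here is a minimal sketch of two LiDAR disruptions applied to a clean point cloud. It is an illustration only, not the toolkit's actual API: the function names `limit_lidar_fov` and `drop_lidar_points`, their parameters, and the choice of these two disruptions are assumptions made for this example.

```python
import math
import random


def limit_lidar_fov(points, fov_deg=120.0):
    """Hypothetical helper: keep points whose azimuth is within +/- fov_deg / 2
    of the +x (forward) axis. Each point is an (x, y, z) tuple."""
    half = math.radians(fov_deg) / 2.0
    return [p for p in points if abs(math.atan2(p[1], p[0])) <= half]


def drop_lidar_points(points, drop_ratio=0.5, seed=0):
    """Hypothetical helper: drop each point independently with probability
    drop_ratio, using a seeded generator so the result is reproducible."""
    rng = random.Random(seed)
    return [p for p in points if rng.random() >= drop_ratio]


# Toy "clean" point cloud (made-up values, not taken from nuScenes or Waymo).
clean = [(10.0, 0.0, 0.0), (0.0, 10.0, 0.0), (-10.0, 0.0, 0.0), (5.0, 5.0, 1.0)]

# Only the two points in the forward 120-degree sector survive.
front_only = limit_lidar_fov(clean, fov_deg=120.0)
print(front_only)
```

A detector trained on the clean data can then be evaluated on the disrupted copies without collecting any new data.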

We argue that an ideal fusion framework should satisfy the following goal: when only a single modality's sensor fails, performance should be no worse than that of a method operating on the other modality alone. Otherwise, current fusion methods should be replaced by two separate networks, one per modality, with fusion performed as a post-processing step. We hope our work sheds light on developing robust fusion methods that can truly be deployed on autonomous vehicles.
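The goal stated above can be written as a simple check. The sketch below assumes a single scalar metric per model (for example mAP, higher is better); the function name `meets_robustness_goal` and the numbers in the usage lines are placeholders for illustration, not results from the benchmark.

```python
def meets_robustness_goal(fusion_camera_failed, fusion_lidar_failed,
                          camera_only, lidar_only):
    """Return True if the fusion model degrades gracefully.

    fusion_camera_failed: fusion model score when the camera input fails
    fusion_lidar_failed:  fusion model score when the LiDAR input fails
    camera_only:          score of a camera-only model on clean camera data
    lidar_only:           score of a LiDAR-only model on clean LiDAR data
    """
    # With the camera gone, fusion should match at least the LiDAR-only model.
    ok_without_camera = fusion_camera_failed >= lidar_only
    # With the LiDAR gone, fusion should match at least the camera-only model.
    ok_without_lidar = fusion_lidar_failed >= camera_only
    return ok_without_camera and ok_without_lidar


# Placeholder scores, chosen only to exercise both outcomes.
robust = meets_robustness_goal(0.60, 0.35, camera_only=0.30, lidar_only=0.58)
fragile = meets_robustness_goal(0.60, 0.05, camera_only=0.30, lidar_only=0.58)
print(robust, fragile)
```

If the check fails, the argument above says that two separate single-modality networks with post-processing fusion would be the safer choice.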

If you have any questions, please contact 107057210+anonymous-benchmark@users.noreply.github.com for further help.

Benchmark

Autonomous driving perception system with camera and LiDAR sensors.

Benchmarking the robustness of state-of-the-art 3D detection methods.

Announcement

- The robust dataset toolkit has been released!

Citation

@misc{anonymous2022Robustness,
  title={Benchmarking the Robustness of LiDAR-Camera Fusion for 3D Object Detection},
  author={Anonymous Author(s)},
  year={2022},
}